Partnership with Snowflake

We’re proud to be part of the Snowflake Partner Network (SPN) program and take an active part in its ecosystem and events. Our team continuously explores Snowflake’s latest features and helps clients design, deploy, and scale modern data solutions within the Snowflake environment.

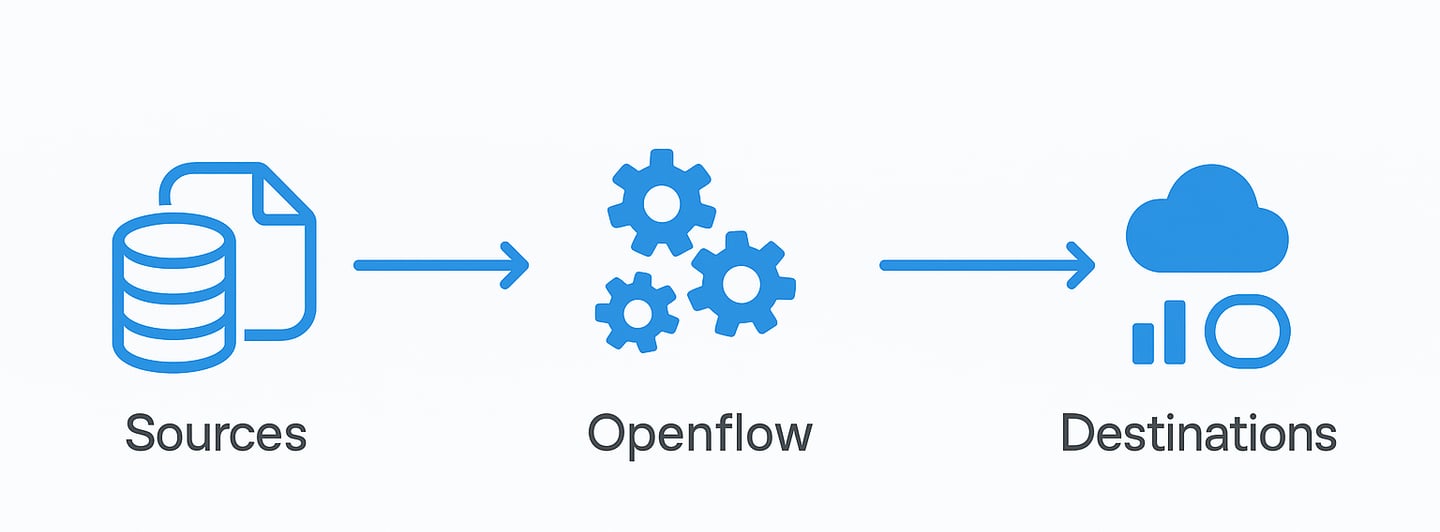

Snowflake Openflow is Snowflake’s data integration platform, built on Apache NiFi, for ingesting, transforming, and routing data between a wide range of sources and destinations. It supports batch, streaming, and multimodal data, including structured, semi-structured, and unstructured content such as documents, images, and events.

Deployed in your own AWS VPC, Openflow provides full control with role-based security, observability, and isolation by team or environment. Its Bring Your Own Cloud (BYOC) approach allows enterprises to customize deployments, meet compliance needs, and integrate with private networks. With its scalable runtimes, intuitive UI, and deep Snowflake integration, Openflow simplifies building resilient, real-time data pipelines for analytics, AI, and enterprise data activation.

Snowflake Openflow:

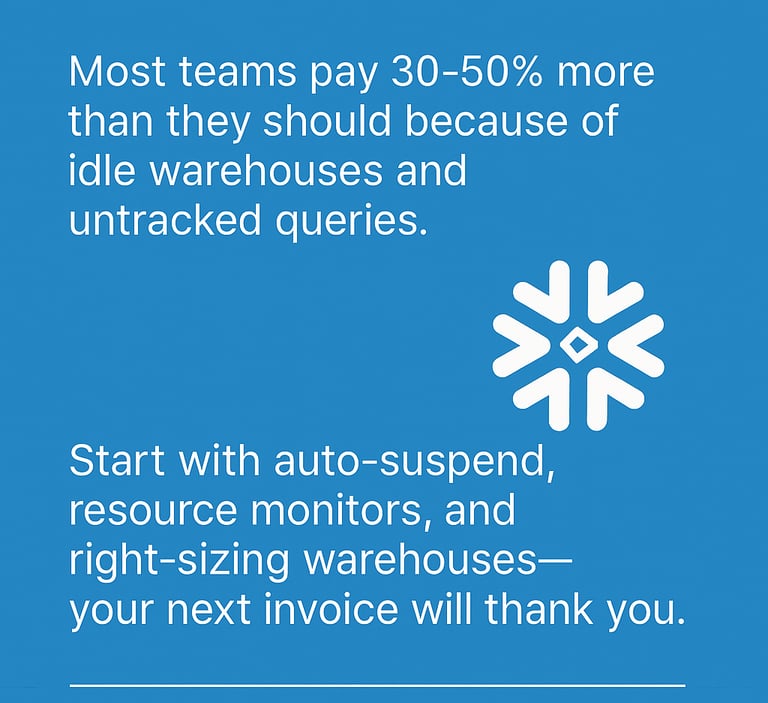

Cost Optimization & Monitoring Techniques:

Snowflake is powerful, but it can also become a silent budget drain. Many teams spin up new virtual warehouses, leave old ones running, or store terabytes of unused data without realizing it. Without a proper monitoring and cost optimization strategy, your cloud data warehouse can become the most expensive line item in your analytics budget.

What drives Snowflake costs up?

You’re not alone if you’ve received a shocking Snowflake invoice. Common mistakes include:

Over-provisioned warehouses running 24/7 for workloads that only need a few hours of compute.

Unused tables or stale data consuming storage costs month after month.

Untracked query usage from ad-hoc analysis or non-optimized ETL pipelines.

Lack of cost visibility across teams, leading to finger-pointing when invoices spike.

The worst part? Without a proactive approach, costs can double in just a few months as usage scales.

How to fix it?

Here’s how to take control of your Snowflake spend:

Implement Warehouse Auto-Suspend & Auto-Resume

Set warehouses to auto-suspend after 5–10 minutes of inactivity.

Use auto-resume to start compute only when queries need it.

For warehouses that previously ran around the clock, this alone can cut compute costs significantly, often in the range of 30–50%.
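As a rough sketch of the settings above, the helper below builds the `ALTER WAREHOUSE` statement for a given idle timeout. `REPORTING_WH` is a hypothetical warehouse name, and the helper does no identifier quoting or validation, so treat it as an illustration rather than production tooling.

```python
def auto_suspend_sql(warehouse: str, idle_minutes: int = 5) -> str:
    """Build an ALTER WAREHOUSE statement enabling auto-suspend and auto-resume."""
    if idle_minutes < 1:
        raise ValueError("idle_minutes must be at least 1")
    seconds = idle_minutes * 60  # AUTO_SUSPEND is expressed in seconds
    return (
        f"ALTER WAREHOUSE {warehouse} SET "
        f"AUTO_SUSPEND = {seconds} AUTO_RESUME = TRUE;"
    )


# REPORTING_WH is a hypothetical warehouse name used only for illustration.
statement = auto_suspend_sql("REPORTING_WH", idle_minutes=5)
print(statement)
```

The generated statement can be run from a worksheet or any Snowflake client by a role that is allowed to modify the warehouse.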

Right-Size Your Warehouses

Start small and scale up only when query performance truly demands it.

Use Snowflake’s multi-cluster warehouses only for high-concurrency needs.
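To see why size and uptime matter so much, here is a small back-of-the-envelope calculation. It relies on the fact that credits per hour double with each standard warehouse size step. The workload numbers (a Large warehouse left on all day versus a Medium one used three hours a day) are made-up assumptions, and the price per credit is left out because it depends on your edition and region.

```python
# Credits per hour for standard warehouses; each size step doubles the rate.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}


def monthly_credits(size: str, hours_per_day: float, days: int = 30) -> float:
    """Credits consumed by one warehouse running hours_per_day for `days` days."""
    return CREDITS_PER_HOUR[size] * hours_per_day * days


# Hypothetical comparison: always-on Large vs. a Medium that runs 3 hours a day.
always_on = monthly_credits("LARGE", 24)    # 8 * 24 * 30 = 5760 credits
right_sized = monthly_credits("MEDIUM", 3)  # 4 * 3 * 30 = 360 credits
print(always_on, right_sized)
```

Multiply the credit totals by your contracted price per credit to turn them into a monthly bill estimate.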

Leverage Query Profiling & Caching

Identify the top cost-driving queries and warehouses using the QUERY_HISTORY and WAREHOUSE_METERING_HISTORY views in the SNOWFLAKE.ACCOUNT_USAGE schema.

Encourage developers to reuse cached results instead of re-running heavy queries unnecessarily.
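One way to find the heavy hitters is to export rows from QUERY_HISTORY and rank query texts by a rough cost proxy: execution time multiplied by the credit rate of the warehouse size. The sketch below works on plain dictionaries; the sample rows and the size labels are assumptions for illustration. Because concurrent queries share a warehouse, this proxy overstates the true cost and should only be used for ranking.

```python
from collections import defaultdict

# Assumed size labels; adjust them to match how sizes appear in your export.
CREDITS_PER_HOUR = {"X-Small": 1, "Small": 2, "Medium": 4, "Large": 8}


def rank_by_estimated_credits(rows, top_n=3):
    """Sum a rough credit estimate per query text and return the top_n entries."""
    totals = defaultdict(float)
    for row in rows:
        hours = row["execution_time_ms"] / 3_600_000
        totals[row["query_text"]] += hours * CREDITS_PER_HOUR[row["warehouse_size"]]
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:top_n]


# Hypothetical rows shaped like a QUERY_HISTORY export.
sample_rows = [
    {"query_text": "SELECT * FROM orders", "warehouse_size": "Large",
     "execution_time_ms": 1_800_000},
    {"query_text": "SELECT * FROM orders", "warehouse_size": "Large",
     "execution_time_ms": 1_800_000},
    {"query_text": "SELECT COUNT(*) FROM events", "warehouse_size": "Small",
     "execution_time_ms": 3_600_000},
]
ranked = rank_by_estimated_credits(sample_rows)
print(ranked)
```

A query that shows up repeatedly with identical text, like the first one here, is also a good candidate for result-cache reuse.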

Use Resource Monitors & Budgets

Set resource monitors to send alerts or suspend warehouses when credit consumption reaches a set share of a quota.

Allocate specific warehouses to teams for cost accountability.
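A resource monitor for one team's warehouse might be set up roughly as follows. The monitor name, warehouse name, quota, and thresholds are all hypothetical, and the helper simply assembles the `CREATE RESOURCE MONITOR` and `ALTER WAREHOUSE` statements without quoting identifiers.

```python
def resource_monitor_sql(monitor, warehouse, credit_quota,
                         notify_pct=80, suspend_pct=100):
    """Return the statements that create a monthly monitor and attach it."""
    if not 0 < notify_pct < suspend_pct:
        raise ValueError("notify_pct must be positive and below suspend_pct")
    create = (
        f"CREATE OR REPLACE RESOURCE MONITOR {monitor} "
        f"WITH CREDIT_QUOTA = {credit_quota} "
        f"FREQUENCY = MONTHLY START_TIMESTAMP = IMMEDIATELY "
        f"TRIGGERS ON {notify_pct} PERCENT DO NOTIFY "
        f"ON {suspend_pct} PERCENT DO SUSPEND;"
    )
    attach = f"ALTER WAREHOUSE {warehouse} SET RESOURCE_MONITOR = {monitor};"
    return [create, attach]


# ANALYTICS_MONITOR, BI_WH and the 200-credit quota are illustrative assumptions.
statements = resource_monitor_sql("ANALYTICS_MONITOR", "BI_WH", credit_quota=200)
for sql in statements:
    print(sql)
```

With these values the team is notified at 80% of the monthly quota, and the warehouse is suspended once running statements finish at 100%.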

Optimize Storage

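Drop or archive stale tables, keep Time Travel retention short where a long recovery window is not needed, and use transient tables for data that does not need Fail-safe protection.

Consider automatic clustering only where the performance gains justify the extra compute credits and storage churn that reclustering causes.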
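Finding stale tables can start as a simple filter over table metadata. In the sketch below the table names, sizes, and the `last_used` field are hypothetical; in practice you would have to derive a last-used date from whatever access or modification metadata your account exposes.

```python
from datetime import date


def stale_tables(tables, today, max_idle_days=90):
    """Return tables unused for more than max_idle_days, largest first."""
    stale = [t for t in tables if (today - t["last_used"]).days > max_idle_days]
    return sorted(stale, key=lambda t: t["active_bytes"], reverse=True)


# Hypothetical metadata rows; names, sizes and dates are made up.
tables = [
    {"name": "RAW_EVENTS_2022", "last_used": date(2024, 1, 15), "active_bytes": 500 * 10**9},
    {"name": "STG_ORDERS", "last_used": date(2025, 6, 25), "active_bytes": 20 * 10**9},
    {"name": "TMP_BACKFILL", "last_used": date(2025, 1, 1), "active_bytes": 2 * 10**12},
]
candidates = stale_tables(tables, today=date(2025, 6, 30))
print([t["name"] for t in candidates])
```

Sorting by size puts the tables with the biggest potential savings at the top of the review list.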

Adopt a Cost Monitoring Dashboard

Tools like dbt metrics, Snowsight dashboards, or third-party cost monitoring tools provide near real-time insights.

Visualize spend by warehouse, team, and query pattern to spot trends before they become problems.
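The roll-up behind a "spend by team" chart can be sketched in a few lines. The rows below imitate the `WAREHOUSE_NAME` and `CREDITS_USED` columns of WAREHOUSE_METERING_HISTORY, while the warehouse-to-team mapping and all numbers are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical mapping of warehouses to the teams that own them.
WAREHOUSE_TEAM = {"ETL_WH": "data-eng", "BI_WH": "analytics", "ADHOC_WH": "analytics"}


def credits_by_team(metering_rows, warehouse_team):
    """Sum credits per owning team; unmapped warehouses go to 'unassigned'."""
    totals = defaultdict(float)
    for row in metering_rows:
        team = warehouse_team.get(row["warehouse_name"], "unassigned")
        totals[team] += row["credits_used"]
    return dict(totals)


# Made-up metering rows for a single day.
metering_rows = [
    {"warehouse_name": "ETL_WH", "credits_used": 12.5},
    {"warehouse_name": "BI_WH", "credits_used": 4.0},
    {"warehouse_name": "ADHOC_WH", "credits_used": 1.5},
    {"warehouse_name": "SANDBOX_WH", "credits_used": 2.0},
]
team_spend = credits_by_team(metering_rows, WAREHOUSE_TEAM)
print(team_spend)
```

The "unassigned" bucket is worth watching: credits that nobody owns are usually the first place to look for waste.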

Using Dynamic Warehouse Techniques:

Dynamic warehouse techniques are a key cost optimization strategy in Snowflake because they match compute to actual workload demand, so you do not pay for idle capacity. Warehouses suspend and resume automatically, multi-cluster warehouses add or remove clusters as concurrency changes, and a warehouse can be resized when a workload needs more power. The result is that you pay for compute only while it is needed.

Auto-Suspend / Auto-Resume

Configure warehouses to suspend after X minutes of inactivity (commonly 5–10 min).

They resume automatically when a new query arrives.

Cost benefit: No compute charges while suspended (storage is still billed).
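The effect of the suspend timeout can be explored with a simplified billing model. Snowflake bills warehouse compute per second, with a 60-second minimum each time a warehouse resumes; the sketch below applies that rule to a hypothetical list of query arrivals. It assumes the warehouse suspends exactly when the idle timeout expires, which is a simplification.

```python
def billed_seconds(queries, auto_suspend_s):
    """Estimate billed seconds for (start_second, duration_seconds) queries."""
    total = 0
    session_start = None
    suspend_at = None  # moment the warehouse suspends if nothing else arrives
    for start, duration in sorted(queries):
        if session_start is None or start >= suspend_at:
            # Warehouse was suspended: bill the previous session, then resume.
            if session_start is not None:
                total += max(60, suspend_at - session_start)
            session_start = start
            suspend_at = start + duration + auto_suspend_s
        else:
            # Warehouse still running: the idle timer restarts after this query.
            suspend_at = max(suspend_at, start + duration + auto_suspend_s)
    if session_start is not None:
        total += max(60, suspend_at - session_start)
    return total


# Hypothetical workload: two queries close together, one two hours later.
queries = [(0, 120), (200, 60), (7200, 30)]
short_timeout = billed_seconds(queries, auto_suspend_s=300)   # 5-minute timeout
long_timeout = billed_seconds(queries, auto_suspend_s=3600)   # 1-hour timeout
print(short_timeout, long_timeout)
```

In this made-up workload the queries themselves run for only 210 seconds, so most of the billed time in either case is idle time waiting for the timeout.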

Multi-Cluster Warehouses with Scaling

Instead of keeping a single large warehouse running:

Use a multi-cluster warehouse (available in Enterprise Edition and higher) with minimum and maximum cluster counts.

Snowflake starts additional clusters only when concurrency is high and shuts them down again as demand drops.

Cost benefit: Pay only for extra compute during peak loads.
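A multi-cluster configuration along these lines could be expressed as follows. `BI_WH` and the cluster counts are hypothetical; the helper only assembles the `ALTER WAREHOUSE` statement with the `MIN_CLUSTER_COUNT`, `MAX_CLUSTER_COUNT`, and `SCALING_POLICY` parameters.

```python
def multi_cluster_sql(warehouse, min_clusters=1, max_clusters=3,
                      scaling_policy="STANDARD"):
    """Build an ALTER WAREHOUSE statement for multi-cluster auto-scaling."""
    if not 1 <= min_clusters <= max_clusters:
        raise ValueError("need 1 <= min_clusters <= max_clusters")
    if scaling_policy not in ("STANDARD", "ECONOMY"):
        raise ValueError("scaling_policy must be STANDARD or ECONOMY")
    return (
        f"ALTER WAREHOUSE {warehouse} SET "
        f"MIN_CLUSTER_COUNT = {min_clusters} "
        f"MAX_CLUSTER_COUNT = {max_clusters} "
        f"SCALING_POLICY = {scaling_policy};"
    )


# BI_WH is a hypothetical warehouse serving dashboards with bursty concurrency.
scaling_statement = multi_cluster_sql("BI_WH", min_clusters=1, max_clusters=4)
print(scaling_statement)
```

Keeping the minimum at one cluster means the extra clusters exist, and are billed, only while queries are actually queuing.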

Right-Sizing Warehouses

Choose the smallest warehouse size that meets performance requirements.

Use larger sizes only for short bursts instead of leaving big warehouses running continuously.

Workload Segregation with Dynamic Scaling

Assign separate warehouses for ELT/ETL, BI dashboards, and ad-hoc queries.

Each warehouse can scale independently, so the needs of one workload do not force you to over-provision compute for the others.
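A per-workload layout can be kept as a small configuration and turned into `CREATE WAREHOUSE` statements. The warehouse names, sizes, and timeouts below are illustrative assumptions, not recommendations; the right values depend on your own workloads.

```python
# Hypothetical per-workload settings: (size, auto-suspend timeout in seconds).
WORKLOADS = {
    "ETL_WH": ("MEDIUM", 60),     # scheduled batch loads, suspend quickly
    "BI_WH": ("SMALL", 600),      # dashboards, keep warm between refreshes
    "ADHOC_WH": ("XSMALL", 300),  # exploratory analysis
}


def create_warehouse_sql(name, size, auto_suspend_s):
    """Build a CREATE WAREHOUSE statement for one workload."""
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {name} "
        f"WAREHOUSE_SIZE = '{size}' "
        f"AUTO_SUSPEND = {auto_suspend_s} AUTO_RESUME = TRUE "
        f"INITIALLY_SUSPENDED = TRUE;"
    )


workload_statements = [
    create_warehouse_sql(name, size, timeout)
    for name, (size, timeout) in WORKLOADS.items()
]
for sql in workload_statements:
    print(sql)
```

Keeping this mapping in version control also documents which team or workload each warehouse belongs to.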

Conclusion:

Snowflake cost optimization isn’t a one-time task; it’s a habit. By combining automated cost controls with ongoing monitoring, you can enjoy Snowflake’s scalability without burning your budget.